This post explains negative SEO techniques that can threaten websites, so your SEO team can identify these threats and defend against them.

Summary

- What are the most common negative SEO attacks that webmasters might encounter,

- How to detect these threats,

- How to defend and stay protected from them.

As many know, SEO (Search Engine Optimization) is the practice of improving a website’s content, structure and code so that it reaches your audience, is more valuable for your users and is easier for search engines to find, read and process.

But there is a dark side to it that is not so mainstream. There is a variety of unethical SEO techniques that spammers, counterfeiters and other bad actors abuse and that can pose a threat to any brand’s website.

Most of them fall under black-hat SEO, and their goal is to get artificially high rankings in the search engine result pages very fast. These techniques look for holes in the algorithms that Google and other search engines use to rank sites and exploit them in an attempt to game the system for quick gains.

Other less-known techniques are what we call “negative SEO”. These are still trying to game the system, but in a different way.

What is negative SEO?

Negative SEO is a set of unethical SEO tactics aimed at decreasing your competitors’ rankings, rather than improving your own.

This combined with black-hat SEO techniques can lead to counterfeiters outranking legitimate brands for their own products.

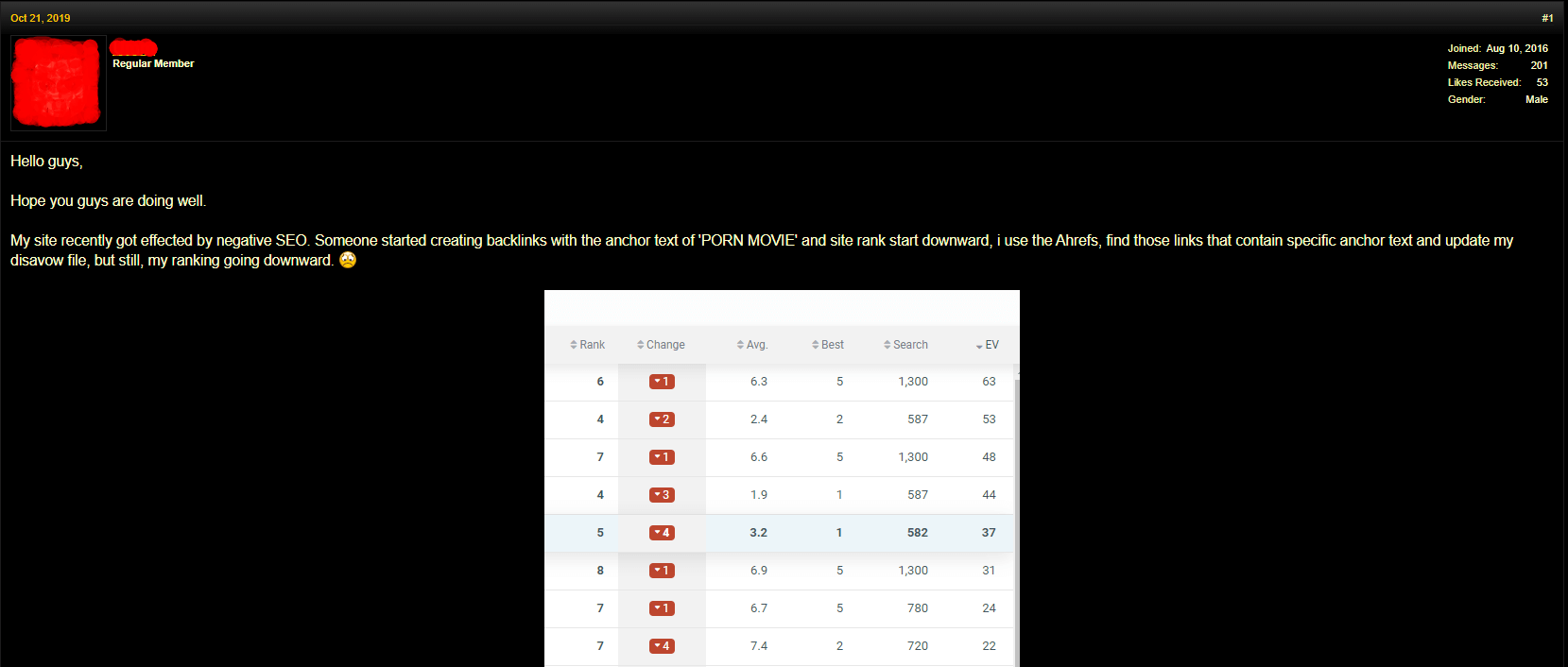

As the screenshot above shows, this unlucky forum member got hit by a rather basic negative SEO attack and was not prepared to identify it or defend against it, relying on other forum members to save his day.

Hopefully with the techniques I am going to list today, you will be protected against these kinds of attacks and have a solid methodology to keep your website safe.

Here you can find a Google Webmaster’s video as an introduction to negative SEO and some of the techniques that I will be sharing today.

Most used techniques and how to defend against them.

Link farms

This is the most basic kind of negative SEO attack that you can be targeted with. It consists of spamming a website with a large number of low-quality links. The attacker can either own the spammy sites or pay third parties to spam your site on their behalf.

Link farms used to be a black-hat technique that worked before Google’s Penguin update in 2012. Since then, Google has identified these kinds of links and penalized them, so link farms turned into a negative SEO technique used in the hope of getting a competitor’s rankings penalized by Google.

To make this technique more effective, the attacker can spam exact-match anchor text, which can be harder to spot without the right tools.

How to detect link farms

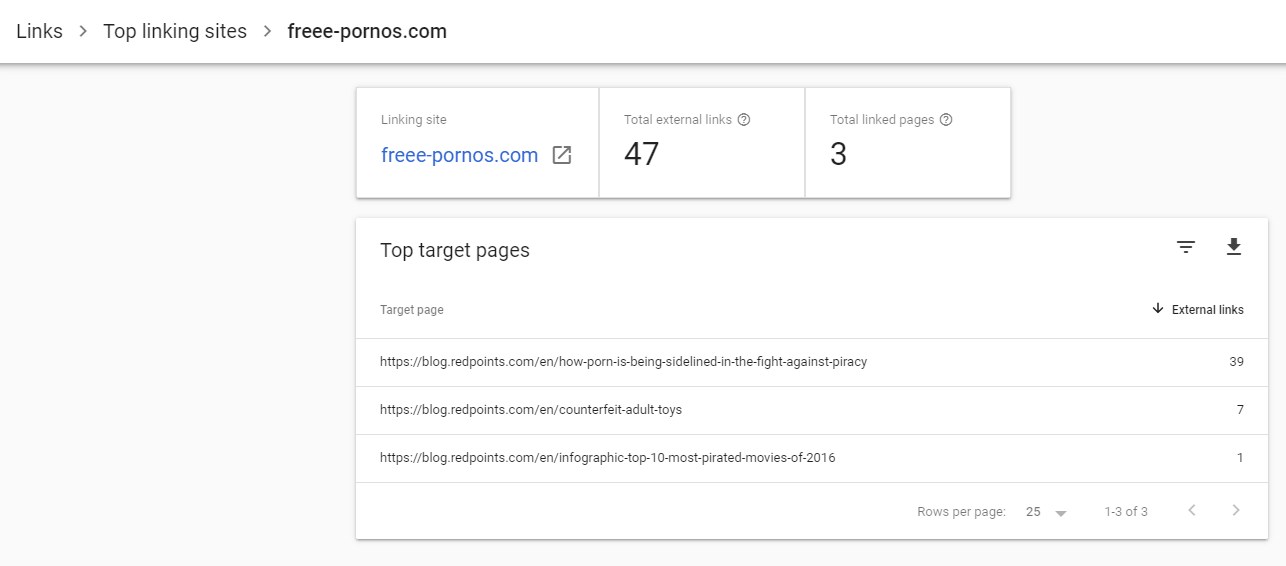

If you work as a webmaster or an SEO, you need to pay attention to the links your website is receiving. You can do this in Google Search Console by clicking on Links > Top linking sites and scrolling through them. It is significantly easier if you have SEO tools that track your links, as most of these tools also give you some kind of “link toxicity” metric.

The most typical examples of spammy and undesired links are:

- Porn websites,

- Spammy directories or forums,

- Many links with identical anchor text,

- Lots of links from one single domain to a single page on your site.

Here’s an example that I recently found for our blog:

If you have doubts about the legitimacy of any of your links you could also check their whois information to get a little bit of information on the organization or individuals managing the doubtful site.
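As a rough illustration of the last two traits in the list above, here is a minimal Python sketch that counts repeated anchor texts and repeated domain-to-page links. It assumes you have already exported your backlinks from whatever tool you use into `(source_url, target_url, anchor_text)` tuples; the function name and the thresholds are hypothetical and should be tuned to your own link profile.

```python
from collections import Counter
from urllib.parse import urlparse


def flag_suspicious_links(links, max_per_anchor=20, max_per_pair=10):
    """Flag repeated anchors and heavy domain-to-page linking.

    links: iterable of (source_url, target_url, anchor_text) tuples.
    The thresholds are illustrative assumptions, not official limits.
    """
    links = list(links)
    # Count how often each anchor text appears, ignoring case and spacing.
    anchor_counts = Counter(anchor.strip().lower() for _, _, anchor in links)
    # Count links from one linking domain to one page on your site.
    pair_counts = Counter(
        (urlparse(source).hostname, target) for source, target, _ in links
    )
    repeated_anchors = {a: n for a, n in anchor_counts.items() if n > max_per_anchor}
    heavy_pairs = {p: n for p, n in pair_counts.items() if n > max_per_pair}
    return repeated_anchors, heavy_pairs
```

Anything this flags still needs a manual look, since a legitimate partner site can also link to you many times.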

How to protect your site against link farms

There’s only one solution to this problem and it is to disavow these links.

This is a rather easy process done in Search Console: you need to create a TXT file disavowing links from specific pages or whole domains and upload it to Google’s disavow tool. Afterwards, Google will not take those links into account.

The full details of this process, including the formatting of the TXT file, can be found in Google’s documentation.
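To give an idea of what that file looks like, here is a small Python sketch that builds one. It relies on the basic format of one entry per line, a `domain:` prefix for whole domains and `#` for comments; the function name and the sample domains are made up for illustration, and you should check the result against Google’s documentation before uploading it.

```python
def build_disavow_file(domains=(), urls=(), note=""):
    """Return the text of a disavow file.

    domains: whole domains to disavow (written as "domain:example.com").
    urls: individual page URLs to disavow (written as-is).
    note: optional comment placed on the first line.
    """
    lines = []
    if note:
        lines.append(f"# {note}")
    # Normalize and de-duplicate domains so each appears once.
    clean_domains = sorted({d.strip().lower() for d in domains})
    lines.extend(f"domain:{d}" for d in clean_domains)
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        domains=["spam.example"],
        urls=["http://bad.example/page.html"],
        note="Link farm spotted in the weekly link check",
    )
    # Save as a plain .txt file for upload.
    with open("disavow.txt", "w", encoding="utf-8") as handle:
        handle.write(text)
```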

Content scraping

This is another quite simple negative SEO technique, but it can hurt your rankings fast if you do not detect it and react quickly.

With this technique, the attacker copies your content and pastes it unchanged on one or more websites, possibly on the link farms discussed above. Most likely this will be carried out by bots that automatically scrape and republish your content.

This may lead to duplicate content issues. On top of that, if Google finds one of the copies first, it may think that that is the original version.

How to detect content scraping

There are plenty of tools, such as Copyscape, that let you search the web for copied content to detect whether you are being targeted by this technique.

You could also be alerted by Google Alerts or any other app/software that you use to track mentions of your brand or keywords.
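If an alert points you to a suspicious page, you can get a rough idea of how much of your text it reuses. The sketch below is only an illustration and not how any of the tools above work: it compares five-word sequences ("shingles") between your article and the candidate text, and both function names are my own.

```python
import re


def shingles(text, size=5):
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def copied_fraction(original, candidate, size=5):
    """Fraction of the original's word sequences that appear in the candidate."""
    own = shingles(original, size)
    if not own:
        return 0.0
    return len(own & shingles(candidate, size)) / len(own)
```

A value close to 1.0 suggests the page republishes your article almost word for word, while a low value points to an ordinary mention or a short quote.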

How to protect your site against content scraping

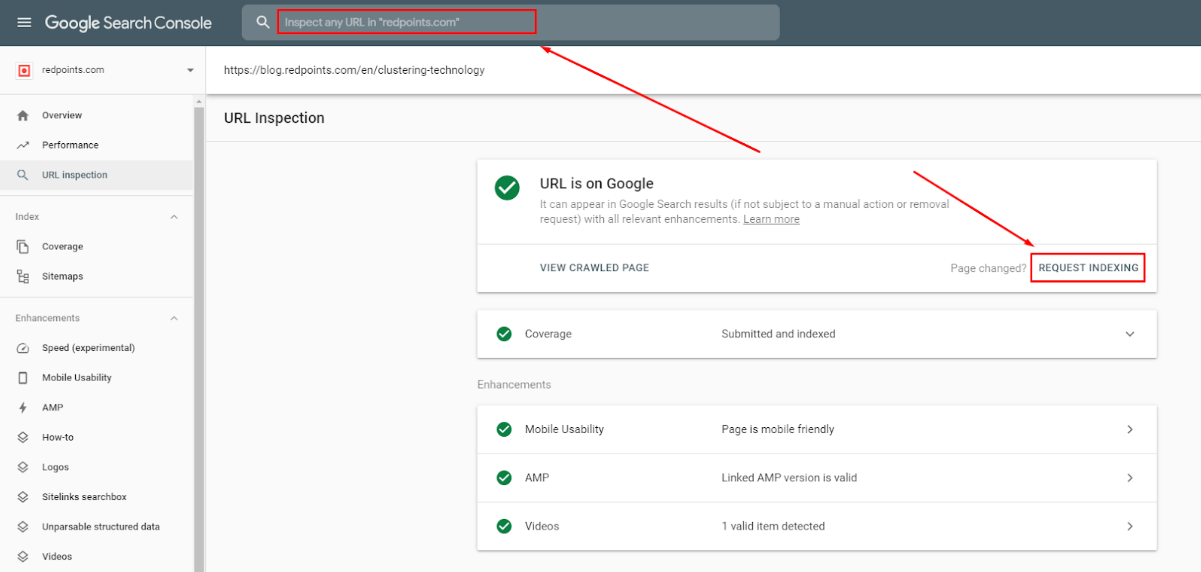

To minimize the impact of this technique, make sure you send your content to Google via Google Search Console and request its indexation. You only need to enter the URL you just published in the top search bar and request indexing, as shown in the screenshot.

The only definitive solution to this problem requires a little more technical knowledge and work: if you manage to identify the IP addresses these scraper bots use to access your site, you can block them entirely. This will only last until they switch to a different IP, though.
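One way to find those IPs is to count requests per client in your server’s access log. Here is a minimal sketch, assuming a log format where the client IP is the first space-separated field on each line (as in the common and combined log formats); the function name and the threshold are hypothetical.

```python
from collections import Counter


def busiest_clients(log_lines, min_requests=1000):
    """Return (ip, request_count) pairs at or above the threshold, busiest first.

    Assumes the client IP is the first space-separated field of each line.
    """
    counts = Counter(
        line.split(" ", 1)[0] for line in log_lines if line.strip()
    )
    return [(ip, n) for ip, n in counts.most_common() if n >= min_requests]
```

Before blocking anything, check that a busy IP is not a legitimate search engine crawler, since blocking those would hurt you more than the scraper does.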

Server overload or excessive crawling

This technique is a bit more complicated than the previous ones. It involves using bots to access your site over and over and overload your server with requests.

This will slow your site down, or worst-case scenario it could make the server crash and return 5xx errors. If this situation persists, it could mean that search engines cannot access your site and you could get delisted from the search results.

How to detect excessive crawling

You can detect this attack by monitoring the bandwidth consumed by your site, or by watching for a spike in 5xx server errors or recurrent site downtime. For this, you could use a tool such as UptimeRobot.

Some hosting providers may offer this monitoring and issue alerts, but it never hurts to take a peek yourself sometimes.
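If you want to take that peek yourself, a simple check is the share of 5xx responses per hour. The sketch below assumes you have already extracted `(hour_label, status_code)` pairs from your logs or analytics export; the function names and the 5% threshold are illustrative assumptions.

```python
from collections import defaultdict


def error_rate_by_hour(records):
    """Return {hour_label: share of 5xx responses} for (hour, status) pairs."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for hour, status in records:
        totals[hour] += 1
        if 500 <= status <= 599:
            errors[hour] += 1
    return {hour: errors[hour] / totals[hour] for hour in totals}


def hours_over_threshold(records, threshold=0.05):
    """Return the hours whose 5xx share is above the threshold, sorted."""
    rates = error_rate_by_hour(records)
    return sorted(hour for hour, rate in rates.items() if rate > threshold)
```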

How to protect your site against excessive crawling

The first line of defense against this kind of attack should be your CDN, so make sure it’s set up. Due to how CDNs work, even if one node overloads, it would just relay traffic to the closest available one. This will still slow your site down, but it will reduce the risk of the site crashing altogether. If you’re interested in a more in-depth explanation of how CDNs work, please refer to Wikipedia, as CDNs are a whole different topic.

If this is a recurring problem, you should try to isolate the IPs this fake traffic is coming from and block them from accessing your site. Your hosting provider should be able to offer some assistance in pinpointing the range of IPs responsible for the attack.
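When the traffic comes from many addresses, grouping them into ranges makes the pattern easier to see. This is a minimal sketch using Python’s standard `ipaddress` module, assuming IPv4 addresses and a /24 grouping; the function name and the `min_hosts` threshold are hypothetical.

```python
import ipaddress
from collections import Counter


def suspicious_networks(ips, prefix=24, min_hosts=3):
    """Group IPv4 addresses into networks and return the crowded ones.

    ips: iterable of IPv4 address strings.
    Returns network strings containing at least min_hosts distinct addresses.
    """
    networks = Counter()
    for ip in set(ips):
        # strict=False lets us build the network from a host address.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        networks[network] += 1
    return sorted(str(net) for net, hosts in networks.items() if hosts >= min_hosts)
```

A range that shows up here is a candidate to discuss with your hosting provider, not an automatic block, because shared networks can also carry real visitors.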

Fake reviews

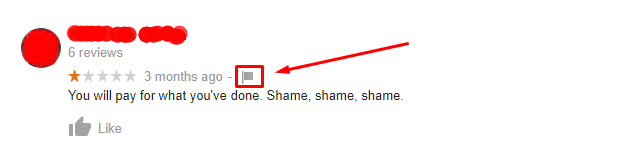

We have talked extensively about fake reviews in the past, but this time I am referring exclusively to the Google reviews that show in the search results and on Google Maps.

Reviews are critical for local SEO, but even for bigger brands they are still a ranking factor, and probably one of the easiest to manipulate if you have bad intentions.

How to detect fake reviews

In this case there is no workaround: you need to have your Google My Business profile set up and keep an eye on the reviews.

Some common traits that can identify fake reviews are:

- Too general, sometimes it doesn’t even name the product or service, e.g. “it was terrible”,

- Many similar reviews, all saying the same thing, or with very little variation,

- Grammatical mistakes,

- Length, a three-word review is much more likely to be fake than a three-sentence review,

- Looking at who is reviewing and what they reviewed in the past.
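Two of these traits, length and near-identical wording, are easy to pre-screen automatically. The sketch below is a rough heuristic of my own, not a Google feature: it assumes you have copied the review texts into a list, and the function name and thresholds are arbitrary starting points.

```python
from difflib import SequenceMatcher


def review_flags(reviews, min_words=4, similarity=0.9):
    """Return {index: [flags]} for a list of review texts.

    Flags reviews shorter than min_words and pairs of reviews whose
    text similarity ratio is at or above the given threshold.
    """
    normalized = [" ".join(text.lower().split()) for text in reviews]
    flags = {i: set() for i in range(len(reviews))}
    for i, text in enumerate(normalized):
        if len(text.split()) < min_words:
            flags[i].add("very short")
        # Compare with every earlier review to spot near-duplicates.
        for j in range(i):
            if SequenceMatcher(None, text, normalized[j]).ratio() >= similarity:
                flags[i].add("near-duplicate")
                flags[j].add("near-duplicate")
    return {i: sorted(found) for i, found in flags.items()}
```

A flag only means the review deserves a closer look; real customers write short reviews too.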

How to protect against fake reviews

Fake reviews violate Google’s guidelines, which explicitly list “post reviews on behalf of others or misrepresent your identity or connection with the place you’re reviewing” among the prohibited behaviors, so if you are certain you have identified fake reviews on your profile, you shouldn’t have trouble getting Google to remove them.

To report a fake review, you just need to search for your company in Google and scroll through the reviews until you see the one you are looking for. Then, click the flag icon beside it and complete the report form.

Security breaches or hacked site

This kind of attack is far more sophisticated than any of the previous ones, and if someone goes this far you should be worried about many more things than negative SEO. Realistically, protecting a website from being hacked is well beyond the average SEO’s skillset, but there are a lot of best practices that we can apply to increase a website’s security.

Some of the things that could happen if a bad actor takes hold of your website are:

- Publishing new pages with spammy content,

- Modifying or deleting content,

- Adding outgoing links, which is especially dangerous if they hide them with display:none in the HTML,

- Modifying your sitemap or robots.txt,

- Adding noindex tags,

- Redirections from your pages to external ones.

How to detect security breaches

Your best chance of detecting this kind of problem is to have a system that logs every single change made in the back office of your site and to keep an eye on it.
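As a complement to the change log, you can spot-check the HTML of your key pages for two of the changes listed earlier: noindex tags and links hidden with display:none. This is a minimal sketch using Python’s standard `html.parser` module. The class and function names are my own, and it only looks at a robots meta tag and at inline styles on the link itself, so hiding done through CSS files or parent elements would go unnoticed.

```python
from html.parser import HTMLParser


class TamperScanner(HTMLParser):
    """Collects noindex directives and inline-hidden links from a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.hidden_links = []

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        # <meta name="robots" content="noindex, ...">
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            if "noindex" in attributes.get("content", "").lower():
                self.noindex = True
        # <a href="..." style="display:none">
        if tag == "a":
            style = attributes.get("style", "").lower().replace(" ", "")
            if "display:none" in style:
                self.hidden_links.append(attributes.get("href", ""))


def scan_page(html):
    """Return (has_noindex, list_of_hidden_link_urls) for an HTML string."""
    scanner = TamperScanner()
    scanner.feed(html)
    return scanner.noindex, scanner.hidden_links
```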

How to prevent security breaches

As I said earlier, it’s not usual that SEOs have cybersecurity skills. There should be specialized developers to take care of that work. Still, we can do a lot as webmasters to keep our website safe, such as:

- Using HTTPS,

- Keeping your CMS and plugin stack updated,

- Activating two-step authentication, for example if you use WordPress,

- Using strong passwords and never writing them down on paper,

- Keeping track of users and their permissions on your back office, Search Console, etc.,

- Keeping watch on sections where users can comment or upload files. It’s preferable to require moderator approval for every single comment and especially for uploads,

- Not revealing too much in error messages, as this can give malicious users clues about how your infrastructure works.

Conclusion

To sum up, there are a lot of things that you should keep an eye on to be safe from negative SEO, but with an established methodology and routine it can become a small task in your weekly schedule. The most important ones are:

- Requesting indexation of every page you publish or update.

- Checking the links you gained this week.

- Monitoring bandwidth consumption and uptime.

- Tracking alerts on your brand name and main keywords.

- Reading new Google reviews.

- Reviewing the website change log.

I hope this post was useful, that you learned about the negative SEO threats you may face, and that you now feel confident protecting yourself and your site against them.