While artificial intelligence has created significant optimization opportunities, driving efficiency and opening avenues for revenue growth, AI tools also present significant challenges as malicious actors use this technology to bypass existing security systems.

In this article, we’ll explore the rise of AI-powered scams, from sophisticated schemes that mimic humans on video to phishing emails that trick recipients into revealing sensitive business information, and provide insights into effective business strategies for prevention and protection.

AI-powered scam techniques

Artificial intelligence has played a pivotal role in advancing technology across industries, but its rapid progress has also enabled complex scams built on the same AI techniques. Here’s a detailed breakdown of how AI technology is applied in real-world fraud:

Machine learning algorithms

Machine learning (ML) algorithms are designed to analyze data, learn patterns, and make decisions with minimal human input. They are trained on large datasets to uncover insights and make predictions. Malicious actors can exploit specific algorithms depending on the type of scam they aim to execute.

- Supervised learning: Supervised machine learning algorithms are trained on historical data to predict future outcomes. Scammers exploit this technique to model and mimic legitimate transaction patterns, which allows them to bypass fraud detection systems. In the banking sector, for example, fraudsters replicate a victim’s usual spending patterns so that fraudulent transactions seem legitimate.

- Reinforcement learning: In reinforcement learning, algorithms learn to make sequences of decisions by continuously refining their actions based on feedback to achieve a goal. Scammers exploit this to automate systems that test different strategies and refine their approaches based on what is most effective at bypassing security measures.
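To illustrate why mimicking a customer’s spending pattern is effective, the following minimal Python sketch uses a simple z-score check as a stand-in for a fraud detection model. The function name `is_suspicious`, the threshold, and the sample amounts are all hypothetical; real detection systems rely on far richer features and models.

```python
from statistics import mean, stdev


def is_suspicious(history, amount, threshold=3.0):
    """Flag an amount whose z-score against past spending exceeds the threshold.

    Hypothetical helper for illustration only; not a production fraud model.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts are identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Made-up spending history for one customer.
history = [42.0, 55.5, 38.2, 61.0, 47.3, 52.8, 44.1]

print(is_suspicious(history, 4800.0))  # True: far outside the usual range
print(is_suspicious(history, 58.0))    # False: blends in with past spending
```

A transaction sized to match the customer’s usual range passes this kind of check, which is the gap that pattern-mimicking fraud targets. For that reason, an amount-based rule like this one is best treated as one signal among several.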

Natural Language Processing (NLP)

Scammers use NLP to create and send convincing fake emails and messages. By analyzing vast amounts of data on user behavior and preferences, these AI systems generate personalized content that is incredibly difficult to distinguish from genuine communications. For instance, in 2020, an AI-powered email scam targeted several corporations, leading to significant data breaches. The emails were tailored to the interests and activities of individual employees, making them highly effective.

NLP can also power chatbots and dialogue systems that copy the style and tone of legitimate entities. It typically combines lexical, syntactic, and semantic analysis to break language down into patterns that can be reproduced in a human-like way.

Fake customer support bots, for example, are commonly used to trick potential victims into providing sensitive information or documentation under the pretense of a simple security check.

These scam chatbots are often found on social media platforms, messaging services, or fake websites, where their operators use NLP algorithms to conduct authentic-looking automated conversations.

Computer vision

Computer vision is a field of AI that uses machine learning and neural networks to teach computers and systems to see and interpret visual input similarly to humans. It enables machines to interpret and act upon visual data, using techniques such as image and video recognition to identify faces, text, and activities.

By manipulating this technology, scammers can create realistic but fraudulent media assets for social media and websites. Images and videos could be created to bypass current authentication measures or create convincing scams that replicate existing product lines and brands.

Deepfake videos, for example, can impersonate both public and private individuals. They can spread misinformation and sway public opinion by depicting people in compromising situations, leaving the victims to prove that they were never involved.

Social engineering bots

Social engineering bots use AI to interact with people in a way that manipulates them into divulging confidential information or performing actions that compromise security. These bots can:

- Simulate human conversations: Engage in realistic conversations to gain trust and extract sensitive information.

- Phishing: Use sophisticated tactics to convince individuals to click on malicious links or download harmful attachments.

Voice synthesis and cloning

AI can clone human voices to create convincing audio for scams. This can be used for:

- Voice phishing (vishing): Impersonating trusted individuals or authorities to extract sensitive information or authorize transactions.

- Fake customer support calls: Scamming individuals by posing as legitimate customer service representatives.

Autonomous agents

Autonomous agents can perform tasks without human intervention, making them useful for automating scam processes. These agents can:

- Automate phishing campaigns: Send out large volumes of phishing emails with minimal human oversight.

- Credential stuffing: Automate the process of trying large numbers of username and password combinations to gain unauthorized access to accounts.
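On the defensive side, credential stuffing tends to leave a recognizable trace: a single source failing logins against many different usernames within a short time. The sketch below shows one possible way to flag that pattern. The class name `StuffingDetector`, the window length, and the threshold are illustrative assumptions, not a reference implementation.

```python
from collections import defaultdict, deque


class StuffingDetector:
    """Flag sources that fail logins for many distinct usernames in a time window.

    Hypothetical example class; thresholds are arbitrary and would need tuning.
    """

    def __init__(self, window_seconds=60, max_usernames=5):
        self.window_seconds = window_seconds
        self.max_usernames = max_usernames
        # Maps a source (for example an IP address) to its recent failures.
        self._failures = defaultdict(deque)

    def record_failure(self, source, username, timestamp):
        """Record a failed login and return True if the source looks automated."""
        events = self._failures[source]
        events.append((timestamp, username))
        # Discard failures that have fallen out of the sliding window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_usernames = {name for _, name in events}
        return len(distinct_usernames) > self.max_usernames


detector = StuffingDetector(window_seconds=60, max_usernames=3)
# One source cycling through different usernames, one attempt per second.
for second, name in enumerate(["alice", "bob", "carol", "dave"]):
    flagged = detector.record_failure("203.0.113.7", name, second)
print(flagged)  # True: four distinct usernames within the window
```

Counting distinct usernames rather than raw failures is a deliberate choice in this sketch: a real user who mistypes their own password several times stays under the threshold, while a source trying many different accounts does not.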

Behavioral analytics

Scammers can use AI to analyze and predict user behavior, tailoring scams to specific targets. This involves:

- Personalized attacks: Crafting scams based on detailed profiles of individual victims to increase the chances of success.

- Adaptive attacks: Modifying the scam approach in real-time based on the victim’s responses and behavior.

Case studies and examples of AI-powered scams

AI in scams has led to several high-profile incidents showcasing the potential danger and existing threats of artificial intelligence in fraudulent activities.

One of the earliest recorded instances of an AI-generated voice deepfake in a scam occurred in 2019, when the CEO of an unnamed UK-based energy firm believed he was on the phone with the chief executive of his firm’s German parent company. Following the orders of the deepfake voice, the CEO transferred approximately $243,000 to a Hungarian supplier’s bank account.

A CNN report highlights the story of a finance worker at a multinational firm who was tricked into remitting $25 million to fraudsters after joining a video call with what appeared to be the company’s UK-based chief financial officer and other familiar colleagues, all of whom were deepfake recreations.

A third, lesser-known scam uses AI to create professional-looking images and campaigns that can be launched in minutes and reach global audiences. Scammers simply write a description of a fake product and feed it into readily available image generators to create legitimate-looking product listings.

Many of these fraudsters take this a step further and upload slightly altered images of genuine products from well-known brands. Fake websites, social media accounts, and emails can also be generated using NLP technology to appear legitimate to unsuspecting consumers.

Large-scale scam operations like these use multi-layered tactics, from AI-driven phishing tools that customize messages based on known information about the target to realistic deepfake recreations of known colleagues that create seemingly real interactions.

Risks and challenges of AI-powered scams

AI-driven scams not only result in direct financial losses but also have broader implications that affect businesses, individuals, and societal trust in technology. Here’s a deeper look into these challenges:

1. Financial loss

AI scams are incredibly costly, draining billions from the global economy annually. For example, according to a report by the FBI, business email compromise (BEC), often powered by AI technologies that enhance the believability of communications, resulted in over $1.8 billion in losses in 2020 alone. These scams are not only a threat to large corporations but also to small and medium-sized enterprises that may not have the same level of resources to invest in advanced cybersecurity defenses.

2. The erosion of digital trust

As AI scams become more sophisticated, they significantly deteriorate trust in digital platforms. A study highlights that a majority of the public is worried about the ability to distinguish between real and fake media, which could undermine trust in all forms of digital communications, from emails to video content. This erosion of trust can slow down the adoption of digital technologies, hinder digital communication effectiveness, and escalate costs for businesses as they invest more in verifying and securing communications.

3. Misinformation and manipulation

AI-driven scams often use tools like deepfakes to spread misinformation. Such capabilities can be used to create fake news, impersonate political figures, or manipulate stock prices by spreading false financial news. This type of misinformation poses a significant risk to the integrity of public discourse and can have real-world consequences, such as influencing elections or causing panic in financial markets. The rapid spread of misinformation facilitated by AI can outpace the ability of manual fact-checking processes to contain it.

4. Legal and regulatory challenges

The legal landscape struggles to keep pace with the rapid development of AI technologies. Current laws may not adequately cover the new forms of deception enabled by AI. For instance, the use of deepfakes in scams raises questions about liability (whether creators, distributors, or hosting platforms are responsible) and about the specific laws that such acts violate. Closing this regulatory gap requires urgent attention and new legal frameworks that address these novel challenges effectively.

5. Resource drain

Combating AI-driven scams requires significant investment in both technology and human resources. Companies must continuously update their cybersecurity measures and train their employees on the latest scam techniques. This ongoing requirement can divert resources from other critical areas of business, impacting overall productivity and innovation. The cost of managing these risks is not trivial and can be particularly burdensome for smaller organizations.

How to combat AI scams with technology

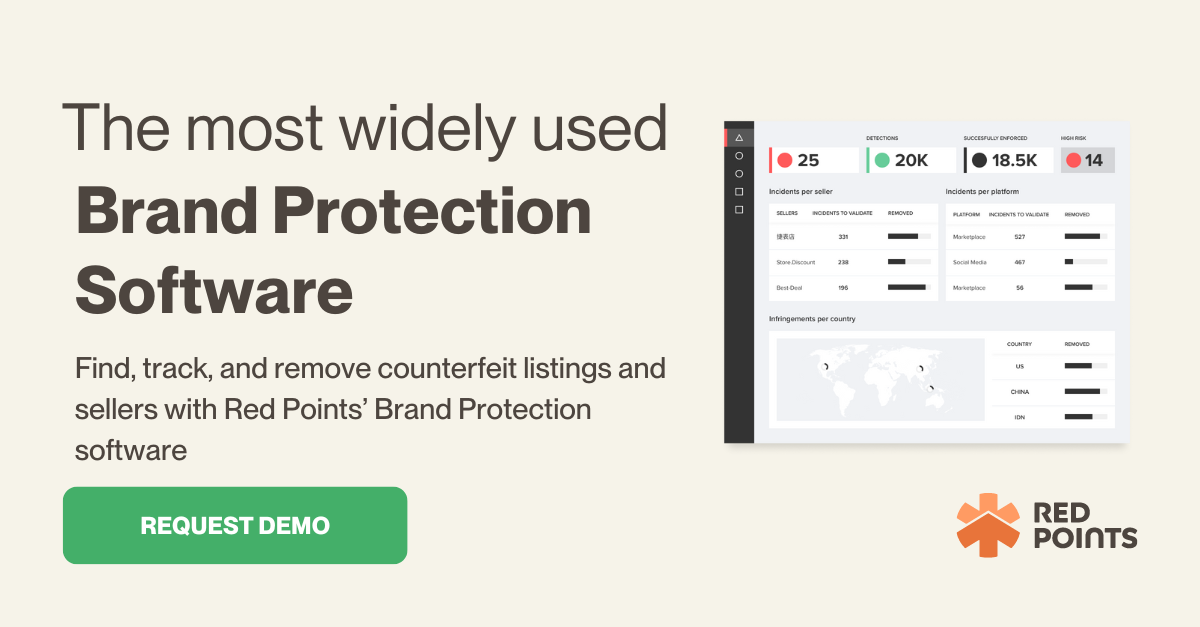

As AI continues to revolutionize industries, its misuse in scams presents significant challenges that can impact brand integrity and consumer trust. Red Points offers an advanced Brand Protection Solution tailored to detect, address, and mitigate the effects of AI-driven fraud and impersonation across a wide range of digital platforms.

AI-driven scams employ sophisticated techniques to impersonate brands, distribute counterfeit products, and manipulate consumers. Leveraging cutting-edge AI technologies like machine learning and image recognition, Red Points provides a dynamic defense mechanism that scans and monitors various digital channels continuously. Here’s how it works:

- Automated detection and enforcement: Red Points employs AI-driven algorithms to continuously scan the internet, identifying and initiating the removal of counterfeit listings, unauthorized sales, and brand impersonations. This automation ensures rapid response times, reducing the window of opportunity for scammers to profit from fraudulent activities.

- Expansive coverage: The solution covers an extensive array of online marketplaces, social media platforms, and domain registrations, crucial for brands with a global presence. This wide-reaching approach is essential to manage the international scope of AI-driven scams effectively.

- Customizable enforcement tactics: Red Points adapts its enforcement strategies to meet the specific needs of each brand. This includes a range of actions from sending cease and desist letters to filing takedown requests and, if necessary, coordinating legal actions against perpetrators.

- Real-time monitoring and reporting: Clients have access to a dashboard that provides real-time insights into detected infringements and the actions taken. This feature ensures that businesses remain informed about the threats they face and the effectiveness of the measures implemented.
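As a generic illustration of what automated detection of brand impersonation can look like at its very simplest, the sketch below compares candidate domain names against a brand’s real domain using string similarity from Python’s standard `difflib` module. This is not Red Points’ implementation, which is not described in this article; the function names, the threshold, and the example domains are all hypothetical.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a 0..1 similarity ratio between two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_lookalikes(brand_domain, candidates, threshold=0.8):
    """Return candidate domains that closely resemble, but are not, the brand domain."""
    flagged = []
    for candidate in candidates:
        if candidate.lower() == brand_domain.lower():
            continue  # the genuine domain is not an impersonation
        score = similarity(brand_domain, candidate)
        if score >= threshold:
            flagged.append((candidate, score))
    # Most similar (most likely to fool a shopper) first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical brand domain and newly observed domains.
observed = ["examp1e-shop.com", "example-shop.com", "weather-report.org"]
for domain, score in flag_lookalikes("example-shop.com", observed):
    print(f"{domain} looks similar (score {score:.2f})")
```

A plain similarity ratio catches simple character swaps such as `l` replaced by `1`, but it would miss many other tricks. A real monitoring system would combine signals like this with image comparison, listing content, and human review.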

What’s next

The expansion of AI in various sectors, while beneficial in many aspects, also amplifies the potential for sophisticated scams. Addressing these challenges requires a concerted effort from technology companies, regulators, and the global community to develop resilient cybersecurity strategies, robust legal frameworks, and effective public education to enhance awareness and preparedness against AI-driven threats.

If you’re interested in protecting your brand from AI-driven fraud and impersonation, request a demo today to connect with one of our experts.