Website scraping, or just web scraping, is by itself not an illegal act or a crime and, in fact, can be beneficial in certain situations.

Yet bad actors can also use web scraping to attack businesses and individuals, causing financial losses, long-term reputational damage, and even legal repercussions.

For instance, cybercriminals can use web scraper bots to copy your content and publish it on another website while impersonating your business in an attempt to steal traffic and trick your website visitors into visiting this fake website instead.

This is why protecting your business from malicious web scraping is important, yet it can be easier said than done if you don’t know where to start.

In this guide, we will discuss all you need to know about malicious web scraping and how you can protect your business from such attacks. By the end of this guide, you’ll have learned about:

- What is web scraping?

- What can be stolen in malicious web scraping?

- The anatomy of malicious web scraping attacks

- How website scraping can negatively impact your brand

- How to protect your business from web scraping

Without further ado, let us begin this guide with the basics: what is web scraping?

What is web scraping?

Web scraping, in a nutshell, is the process of extracting (scraping) data in any form (text content, images, videos, code, etc.) from a website.

Web scraping can be done manually. In the most basic form, right-clicking on a photo on a website, clicking “save image as…,” and then saving the photo file to your computer is an act of web scraping.

However, web scraping can also be done automatically with the help of programs and bots, and when discussing cybersecurity, typically, the term “web scraping” refers to the automated scraping of websites with the help of web scraping bots.

A web scraping bot is essentially a computer program that is designed to collect data on a website. In practice, websites come in many different shapes and forms, so there are also various different types of scraper bots with varying features and functionality.

In fact, many sophisticated hackers and cybercriminals build and run their own web scraper bots so they can scrape data from a specific website as efficiently as possible while evading cybersecurity measures, especially anti-bot solutions.

How automated website scraping works

While there are various types of web scraping bots available on the market today, they typically follow these steps:

- The web scraper bot is instructed to read one or more pages on a website. Some bots can extract the entire website, including its CSS and JavaScript code.

- The web scraper bot will then either extract all the data on the instructed URL or only specific data selected by the user/operator.

- Once done extracting data, the web scraper will output the data in a presentable format that is understood by human users, for example, to an Excel spreadsheet or CSV file.
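The three steps above can be illustrated with a minimal sketch that uses only the Python standard library. It is not how any particular scraper product works: the sample markup, the `ProductParser` class, and the `scrape_to_csv` function are all hypothetical, and the page is an in-memory string rather than a downloaded URL.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content; a real bot would download this from a URL.
SAMPLE_HTML = """
<html><body>
  <div class="product"><h2>Blue Widget</h2><span class="price">19.99</span></div>
  <div class="product"><h2>Red Widget</h2><span class="price">24.50</span></div>
</body></html>
"""


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from the assumed markup above."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished (name, price) rows
        self._field = None   # field the parser is currently inside, if any
        self._current = {}   # fields collected for the current product

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h2":
            self._field = "name"
        elif tag == "span" and attrs.get("class") == "price":
            self._field = "price"

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag in ("h2", "span"):
            self._field = None
        # A closing div with both fields collected ends one product.
        if tag == "div" and {"name", "price"} <= self._current.keys():
            self.rows.append((self._current["name"], self._current["price"]))
            self._current = {}


def scrape_to_csv(html):
    """Step 1: read the page. Step 2: extract selected data. Step 3: output CSV."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["name", "price"])
    writer.writerows(parser.rows)
    return out.getvalue()


print(scrape_to_csv(SAMPLE_HTML))
```

Note how heavily the parser depends on the exact tags and class names in the page. That dependence is what several of the defensive techniques later in this guide try to exploit.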

In practice, however, different types of web scrapers may use different techniques when extracting different forms of data from a website, for example:

- Using common Unix tools like Wget or Curl to download pages and Grep to search their contents. This is considered the simplest form of web scraper bot.

- Web spiders, such as Googlebot, visit your website and then find and follow links to other pages while parsing data. Spiders are often used together with HTML scrapers to get specific data.

- HTML scrapers, for example, those built with a parsing library such as Jsoup, extract data from your web pages by parsing your HTML and detecting patterns in it.
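To make the spider technique more concrete, here is a rough sketch of the "find and follow links" loop. It assumes a hypothetical four-page site stored in a dictionary (`SITE`) instead of a real web server, and the `LinkParser` and `crawl` names are invented for this example.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical site, keyed by path. A real spider would fetch pages over HTTP.
SITE = {
    "/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "/blog": '<a href="/">Home</a> <a href="/blog/post-1">Post 1</a>',
    "/about": "<p>About us</p>",
    "/blog/post-1": "<p>First post</p>",
}


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start, fetch):
    """Breadth-first crawl: visit a page, queue every link not seen before."""
    seen = [start]
    queue = deque([start])
    while queue:
        path = queue.popleft()
        html = fetch(path)
        if html is None:  # unknown page, nothing to parse
            continue
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:
                seen.append(link)
                queue.append(link)
    return seen


print(crawl("/", SITE.get))
```

Starting from a single page, the spider discovers every page that is reachable through links, which is why a page that links to all of your content makes a scraper's job much easier.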

The legality of website scraping

Is right-clicking and saving a picture from a website illegal? Of course, the answer is no, and from this example, we know that web scraping on its own is perfectly legal.

However, web scraping can become illegal in several scenarios, including but not limited to:

- When it is conducted with the intent to extract non-publicly available data (unauthorized collection or viewing of information).

- When the captured data (even if it’s publicly available) is used for malicious purposes, for example, extortion.

In short, when conducted without malicious intent, website scraping can be considered legal.

Malicious website scraping: Negative impacts

Bad actors may use web scraping bots with malicious intent, including but not limited to:

- Leaking confidential information: the perpetrator may gain unauthorized access to your unpublished information and then leak your business’s confidential information to your competitors or the public. This can cause your business to lose its competitive advantage (e.g., through leaked trade secrets) or damage your reputation. Your competitor might be the one performing the web scraping (or paying others to do so) for this purpose.

- Duplicate content: the perpetrator may extract unique content from your website (e.g., blog posts) and then republish it on another site. This can create a duplicate content issue, which may damage your site’s SEO performance and confuse your target audience.

- Data breach: your website may contain sensitive and/or regulated information, including customers’ personal information. If this data isn’t properly secured, web scraping bots can potentially extract it and then use it for malicious purposes (e.g., using credit card information to make fraudulent purchases) or leak or sell it to other parties.

- Denial of inventory and scalping: a unique type of web scraping bot can automatically purchase high-demand items or hold these items in the shopping cart, rendering these products unavailable to legitimate buyers (denial of inventory). The perpetrator can also sell the item at a higher (scalped) price after the product becomes scarce.

Excessive web scraping bot activity on your website may also slow down your website’s performance, negatively affect user experience, and distort your website’s analytics data (bounce rate, page views, user demographics data, etc.).

These are just a few reasons why you should protect your website from malicious and excessive web scraping as soon as possible, which we will discuss in the next section.

How to protect your business from malicious website scraping

With the state of the digital environment and cybercrime in recent years, unfortunately, 100% prevention of malicious web scraping from targeting your website is virtually impossible.

Yet, we can still be proactive to make it as difficult as possible for bad actors to perform web scraping on our website by strengthening three key aspects:

- Establishing a solid foundation on the website by optimizing structure, ensuring frequent updates, etc.

- Implementing a strong malicious bot detection and management solution, since web scraping is typically performed by malicious bots.

- Establishing automated monitoring for duplicated content and brand infringement and ensuring these pages get reported and taken down quickly.

Below, we’ll discuss them one by one.

- Establishing a strong security foundation

A key objective when aiming to prevent web scraping is to make it as challenging as possible for the web scraper bot to access and extract your data, so that the bot wastes its resources and the perpetrator is discouraged from targeting your site. Here are some tips you can use:

- Don’t expose your dataset: don’t provide a way for these bots to access your entire dataset at once. For example, don’t publish a page listing all your blog posts, so users need to use the search feature to find a post. Also, avoid exposing endpoints and APIs that don’t need to be public, and obfuscate and encrypt your endpoints at all times.

- Honeypotting: another effective technique to protect your content is to add ‘honeypots’ to your page’s HTML to trick web scraper bots. A basic honeypotting technique is to add links or content that are invisible to human users but can be parsed by bots. When a bot follows one of these hidden links, it can be redirected to a fake page serving thin or fake content that poisons its data.

- Frequent updates and modifications: an especially effective technique to protect your website from HTML parsers and scrapers is to frequently and intentionally change your HTML markup and patterns. This approach is especially important if your site has a collection of similar content that may naturally form HTML patterns (e.g., regularly updated blog posts).
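The honeypotting tip above can be sketched in a few lines. This is only an illustration under stated assumptions: the trap path, the `HIDDEN_LINK` markup, and the `HoneypotTracker` class are hypothetical, and the sketch also assumes the trap path is disallowed in robots.txt so that well-behaved crawlers such as search engines never request it.

```python
# Hypothetical trap path: linked from the page but hidden from human visitors,
# and assumed to be disallowed in robots.txt so legitimate crawlers skip it.
TRAP_PATH = "/special-offers-archive"

# Markup to embed in real pages. Humans never see or click it, but a bot that
# parses the HTML and follows every link will request TRAP_PATH.
HIDDEN_LINK = (
    f'<a href="{TRAP_PATH}" style="display:none" '
    'tabindex="-1" aria-hidden="true">Archive</a>'
)


class HoneypotTracker:
    """Flags clients that request the trap and serves them decoy content."""

    def __init__(self):
        self.flagged = set()

    def handle_request(self, client_id, path):
        """Return which kind of content to serve: 'real' or 'decoy'."""
        if path == TRAP_PATH:
            self.flagged.add(client_id)
        return "decoy" if client_id in self.flagged else "real"


tracker = HoneypotTracker()
print(tracker.handle_request("visitor-1", "/blog"))       # ordinary visitor
print(tracker.handle_request("bot-7", TRAP_PATH))         # bot follows the trap
print(tracker.handle_request("bot-7", "/blog"))           # stays flagged afterwards
```

How a client is identified (IP address, session, fingerprint) is left open here, since each choice has its own trade-offs between accuracy and false positives.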

- Monitoring and mitigating web scraper bot activities

The second foundation to preventing web scraping attacks from affecting your business is to monitor and mitigate activities from malicious web scraper bots.

You can check your traffic logs manually (e.g., via Google Analytics) and try to identify signs of malicious bot activity, including:

- Users who are very fast when filling and submitting forms

- Robotic mouse-click and keyboard stroke patterns

- Linear mouse movements

- Repeated similar requests from the same IP address (or a group of IP addresses)

- Inconsistent signatures collected via JavaScript, such as mismatched time zones or inconsistent screen resolutions
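As one example of checking for these signs, the sketch below looks for repeated requests from the same IP address within a short time window. The log format (a timestamp and an IP per request), the thresholds, and the `find_noisy_ips` function are assumptions made for illustration, and the right thresholds depend heavily on your own traffic.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_noisy_ips(events, max_requests=5, window=timedelta(seconds=10)):
    """Return IPs that made more than max_requests within any single window.

    events: iterable of (iso_timestamp, ip) pairs, e.g. parsed from an access log.
    """
    by_ip = defaultdict(list)
    for stamp, ip in events:
        by_ip[ip].append(datetime.fromisoformat(stamp))

    noisy = set()
    for ip, times in by_ip.items():
        times.sort()
        start = 0
        for end, current in enumerate(times):
            # Shrink the window from the left until it spans at most `window`.
            while current - times[start] > window:
                start += 1
            if end - start + 1 > max_requests:
                noisy.add(ip)
                break
    return noisy


# Hypothetical log entries: one IP sends six requests in six seconds,
# another sends two requests thirty seconds apart.
events = [(f"2024-01-01T12:00:0{i}", "203.0.113.7") for i in range(6)]
events += [
    ("2024-01-01T12:00:00", "198.51.100.2"),
    ("2024-01-01T12:00:30", "198.51.100.2"),
]
print(find_noisy_ips(events))
```

A check like this only covers one sign from the list. Bots that rotate through many IP addresses will not trip it, which is part of why combining several signals tends to work better.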

Alternatively, you can invest in advanced bot detection and mitigation solutions that automatically detect the presence of web scraper bots in real time, without the need for human supervision or intervention.

Once you’ve identified the presence of web scraper bots, there are several options you can try to mitigate their activities:

- Challenge: challenge the bot with a CAPTCHA or another challenge-based mitigation approach. However, keep in mind that using too many CAPTCHAs may affect your site’s overall user experience. With the rise of CAPTCHA farm services, where human workers solve challenges on a bot’s behalf, challenge-based bot mitigation techniques have also become relatively ineffective.

- Rate-limiting: limiting resources served to these identified bots, for example, only allowing a limited number of searches per second from a single IP address. Bots run on resources, and the idea is that slowing them down and letting them waste their resources may discourage the bot operator from continuing to target your website.

- Blocking: if you are absolutely certain that the traffic originates from a malicious bot, you can block its access to your website. However, this is not always the most effective approach. Determined attackers can adapt by modifying the bot to circumvent your existing defenses, including sophisticated anti-bot systems like PerimeterX, and may return with a bot that is even more sophisticated than before. Choose your mitigation strategy based on a thorough assessment of your specific circumstances, considering factors such as the potential impact on user experience and the adaptive nature of bot attacks.
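Of the options above, rate-limiting is the easiest to sketch in code. The example below is a minimal sliding-window limiter keyed by IP address. The `SlidingWindowLimiter` class and its parameters are hypothetical, it keeps state in memory for a single process only, and production setups usually enforce limits at a proxy, CDN, or dedicated bot management layer instead.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most max_requests per IP within the last per_seconds seconds."""

    def __init__(self, max_requests, per_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.per_seconds = per_seconds
        self.clock = clock                 # injectable so it can be tested
        self._hits = defaultdict(deque)    # ip -> timestamps of recent requests

    def allow(self, ip):
        now = self.clock()
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.per_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False                   # over the limit: reject or slow down
        hits.append(now)
        return True


# Example: allow at most 2 searches per second from a single IP address.
limiter = SlidingWindowLimiter(max_requests=2, per_seconds=1.0)
for attempt in range(3):
    print(attempt, limiter.allow("203.0.113.7"))
```

When `allow` returns `False`, the site can respond with an error, a delay, or a challenge, depending on how confident it is that the client is a bot.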

- Monitoring, reporting, and taking down fake websites publishing scraped content

The third foundation is to monitor the internet for your scraped content being published on another URL so that you can take the necessary actions to report it and have it taken down.
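One simple way to check whether a suspicious page republishes your content is to compare overlapping word sequences ("shingles") between the two texts. The sketch below is an assumption-laden illustration rather than a description of any monitoring product: the `shingles` and `jaccard_similarity` functions are invented for this example, and finding candidate pages to compare against is a separate problem that it does not address.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a, b, size=3):
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "Our new widget ships worldwide with a two year warranty and free returns"
suspect = "Our new widget ships worldwide with a two year warranty and free shipping"
unrelated = "The weather today is sunny with a light breeze"

print(jaccard_similarity(original, suspect))    # high: near-verbatim copy
print(jaccard_similarity(original, unrelated))  # low: different content
```

A score close to 1.0 suggests the page is a near-verbatim copy and is worth reviewing manually before you report it.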

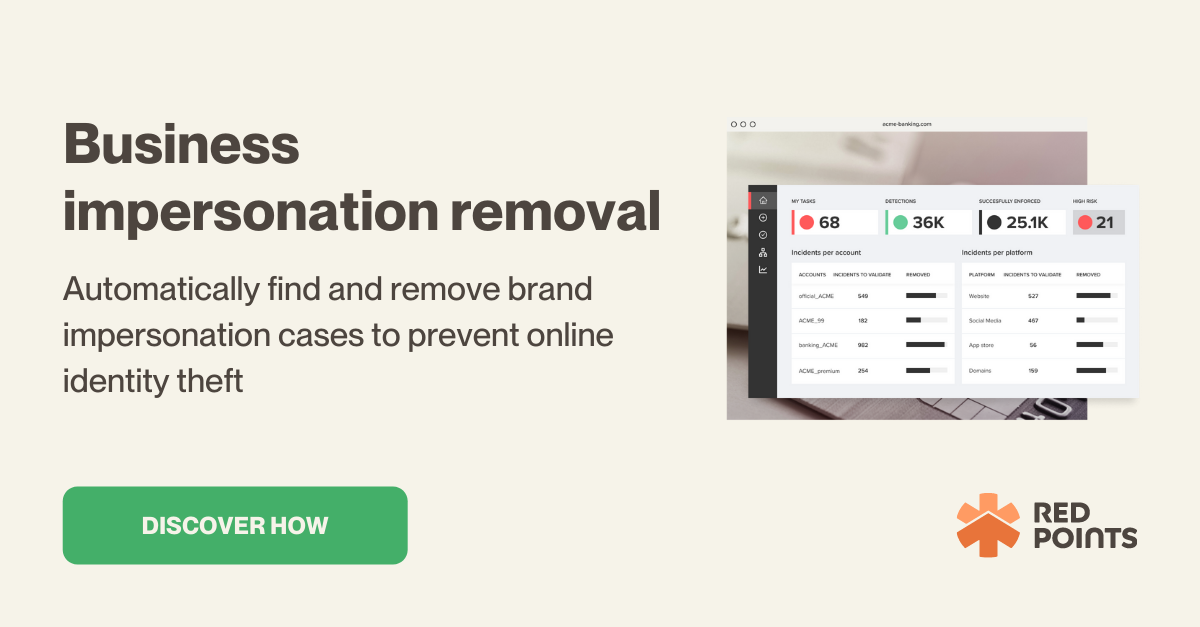

A more effective approach, both in terms of accuracy and cost-efficiency, is to use a dedicated website scraping and Copyright Infringement Monitoring solution like Red Points’.

Red Points leverages state-of-the-art technology to conduct real-time domain research and monitoring. It automatically detects malicious web scraping attempts, notifies you, and takes the necessary steps to take down the fake website, so you can focus on your core business tasks instead.

When needed, Red Points’ Investigation Services can also collect data that might be used as evidence if you are taking legal action against the individuals or organizations performing the malicious website scraping attempt.

What’s next

While web scraping on its own is not an illegal practice and may benefit your business when done correctly, cybercriminals can use web scraping with malicious intent, which may have negative impacts on your business’s reputation and finances.

In this guide, we’ve shared all you need to know about how to protect your business from malicious web scraping. However, without a clear strategy, 100% prevention of these attacks can be very challenging, if not downright impossible.

Proactive prevention and protection of your brand by implementing real-time anti-web scraping protection like Red Points’ Impersonation Removal solution remains the best bet to protect your intellectual property, online content, and brand as a whole.