Artificial intelligence (AI) has existed for many years, but only now are many people beginning to understand how significant a role it may play in business. Recently, the emergence of AI-powered natural language processing (NLP) programs such as ChatGPT has shaken up the tech industry.

ChatGPT may be exciting for many people, but its capabilities also present opportunities for bad actors looking to scam employees and customers. In recent months, ChatGPT and other AI platforms have become a resource for scammers seeking to make their phishing scams more convincing and to impersonate individuals and businesses more effectively.

In this blog, we’ll explore how bad actors are using AI and ChatGPT to scam employees and customers, covering the following topics:

- How bad actors are using AI and ChatGPT to scam employees and customers

- What can businesses do to protect themselves from bad actors using AI and ChatGPT to scam their employees and customers?

- How does Red Points protect businesses and their customers from impersonators and scammers?

How bad actors are using AI and ChatGPT to scam employees and customers

AI presents a significant risk to individuals and businesses. Because the safety systems and regulations around AI are still in their infancy, bad actors have many opportunities to use AI and systems like ChatGPT to scam employees and customers. Here are a few of the main ways scammers are using these tools to take advantage of their victims:

- Email phishing scams

Email phishing scams are a common, underhanded tactic used by scammers every day to steal sensitive information and direct recipients to dodgy websites. With AI, these scams now have the potential to be even more convincing, and therefore even more damaging to individuals and businesses.

AI platforms like ChatGPT can help bad actors increase the scale and sophistication of their email phishing scams. For example, with large language models like ChatGPT, a single scammer can generate thousands of phishing emails every day and then refine them by testing which versions are most convincing.

Human-written phishing emails are often riddled with grammar and formatting errors, which makes it relatively easy for individuals and businesses to realize they are being targeted by scammers. However, bad actors are using AI-driven programs like ChatGPT to eliminate these errors and create increasingly deceptive phishing emails.

- Creating fake websites faster

Scammers are using AI to produce fake websites faster than ever before. AI programs like ChatGPT can provide detailed instructions and guidance for building new sites, and in the hands of a bad actor, they allow fake websites to be produced much faster than if the scammer did all the work manually. This is ideal for scammers who want to create dodgy websites and populate them with content so they can pull off scams as quickly as possible.

Scammers’ ability to create cheap, unreliable content entirely with AI puts both customers and businesses at risk. As it becomes easier for bad actors to produce fake websites quickly, the amount of fake online content is likely to skyrocket. Unfortunately, AI has made it more efficient and cost-effective for scammers to target you and your business.

- Impersonating executives

Bad actors are also using AI platforms to improve their ability to impersonate business executives. Despite increased investment in security and compliance, much of the business world still runs on trust.

Executives are highly trusted and often have significant control over information and funds, which makes them attractive targets for scammers. The rapid progress of AI in recent years has encouraged some bad actors to use it to exploit these vulnerabilities. In particular, scammers have used AI to mimic executives’ voices on phone calls and to imitate their writing style in emails, messages, and other communication.

- Business impersonation

Beyond impersonating individuals, scammers have also been using AI and programs like ChatGPT to impersonate businesses. AI’s ability to generate human-like answers is the main selling point for many bad actors. Using AI, scammers can build deepfakes and chatbots that produce legitimate-sounding business-to-consumer interactions.

It is increasingly difficult for unwitting customers to tell authentic brand messaging apart from communication generated by an AI program instructed to mimic a real brand’s style. As a result, more people are likely to fall victim to scams, losing money and exposing their sensitive information.

What can businesses do to protect themselves from bad actors using AI and ChatGPT to scam their employees and customers?

- Consumer education programs

Reach out to your customers and start educating them about the risks posed by scammers who use AI. If your users are aware of increasingly sophisticated AI-powered scams, they may be less likely to fall for them. If you let users become vulnerable touchpoints for scammers, you expose your business to more risk and lose customers to dodgy websites.

- Employee education

Along with educating consumers, you also need to keep your workforce informed. Teach employees at all levels about the exploitation of ChatGPT and other OpenAI tools, deepfakes, executive impersonation, copyright infringement, and other aspects of AI-powered scams. An informed workforce helps your business stay vigilant and makes it harder for scammers to identify and exploit weak points. You could also consider using AI text-to-speech technology to create informative videos that make this information more accessible and engaging for employees at all levels.

You can also run, or take part in, training focused specifically on phishing. AI and platforms like ChatGPT have made it easier to create convincing, widespread phishing scams, so businesses can protect themselves by getting better at spotting them. Through training and repetition, your team can improve its ability to recognize and respond to phishing attempts.

- Using an AI-powered monitoring tool

One of the best ways to proactively protect your business and customers from scammers using AI is to use intellectual property (IP) monitoring software. At Red Points, our AI-powered Intellectual Property Software lets you automatically find and remove IP infringements so you can safeguard your customers and profits.

How does Red Points protect businesses and their customers from impersonators and scammers?

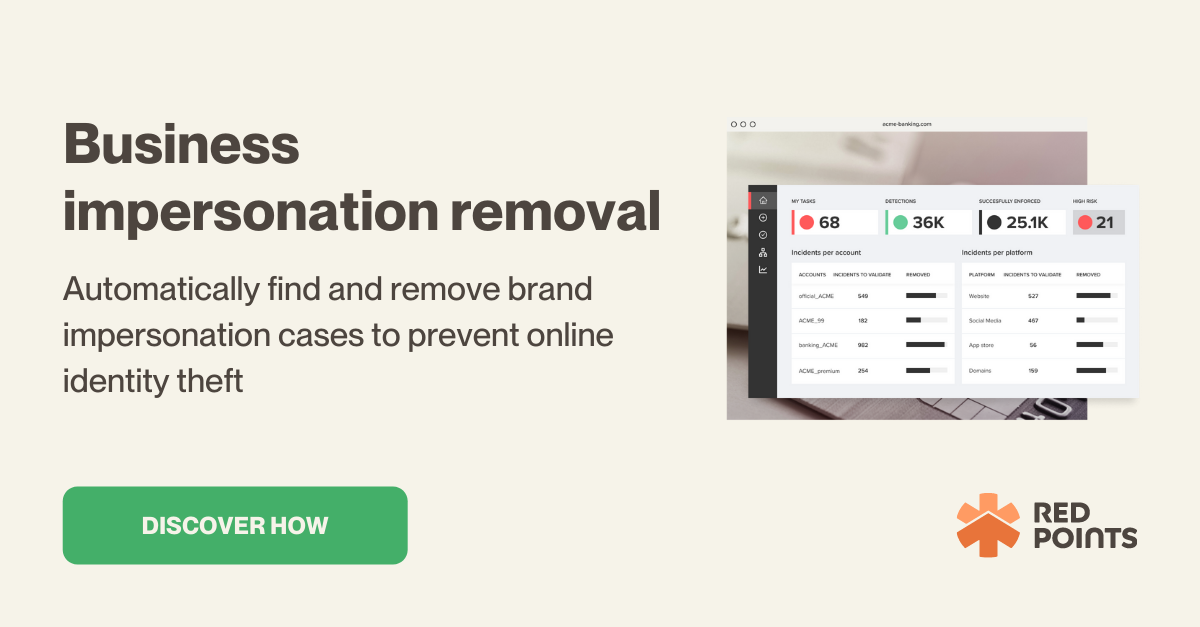

Red Points can save your business and customers from impersonators and scammers with our automated Business Impersonation Protection Software. This popular solution is designed to clean the web of scammers targeting your business and ensure that you can operate online without being threatened by bad actors using AI.

Our software delivers this protection in three simple, effective steps:

- Detect and monitor

Our bot-powered brand abuse scan finds brand impersonators and scammers within seconds. We search across a variety of websites, marketplaces, and social media platforms to uncover bad actors using AI.

Our machine-learning technology improves our detection process with every search, so your ability to find bad actors keeps getting better. Once we have detected potential infringers, we monitor their online presence so you can decide whether to take action against them.

- Validate and enforce

Next, you can filter through thousands of brand impersonators and prioritize which ones to pursue. Our high-risk shortlist highlights the bad actors most likely to cause damage to your brand. You can then validate potential infringers and kickstart the enforcement process.

Once you have done this, we will begin removing, on your behalf, the bad actors that are putting your business and customers at risk.

- Measure and report

You can measure and review your impersonation removal progress within our platform. Short- and long-term reports let you track key progress indicators such as success rates and numbers of detections. Our reporting gives you both a micro and a macro view of your coverage, helping you improve the way you handle bad actors going forward.

What’s next

Bad actors pose an increasing risk to businesses and their customers by using AI to power scams and impersonations. Whether through email phishing or business impersonation, AI and programs like ChatGPT are enhancing bad actors’ ability to create sophisticated, large-scale scams.

While it is important to educate your customers and employees about how to protect themselves from scammers using AI, the most effective way to protect them is to adopt smart, personalized tools that safeguard your brand 24/7. To learn more about how Red Points can help you protect your business from bad actors using AI, request a demo here.